Load Testing TapConnect with Gatling

Mathijs den Burger

April 13, 2022

TapConnect is a barcode ticketing solution for public transport developed by Ximedes. We validated the performance requirements of TapConnect with Gatling, a popular load test tool.

Transport operators use TapConnect to define travel products, like a 1 hour card or a ticket from station A to station B. Customers obtain barcode tickets via sales channels like the GVB travel app and show these barcodes to validators - the devices which validate tickets - to access public transport.

TapConnect provides many features for public transport operators, such as a user interface for customer service, an app for inspecting barcodes, financial reports, real-time dashboards etc. In this blog post we will focus on the issuing and validation of barcode tickets.

When a validator has scanned a barcode it asks TapConnect if the ticket is valid. Such "online validation" can quickly detect fraud with copied barcodes and enables new product types like multi-ride tickets. But it does require high performance.

A validator waits at most 500 milliseconds for a response from TapConnect. When the response arrives in time the online decision is followed, otherwise the validator falls back to an offline decision based on the data encoded in the barcode itself. The final decision of the validator is always sent to TapConnect.
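The fallback rule can be sketched in a few lines of Kotlin. The Decision type and the finalDecision function are made up for illustration and are not taken from the TapConnect code base. Treating a response that arrives at exactly 500 milliseconds as "in time" is also an assumption.

```kotlin
import java.time.Duration

// Hypothetical type for illustration only.
enum class Decision { ACCEPT, REJECT }

// Maximum time a validator waits for the online decision.
val ONLINE_TIMEOUT: Duration = Duration.ofMillis(500)

/**
 * Picks the decision the validator acts on.
 * onlineDecision is null when no response arrived at all.
 * Assumption: a response at exactly 500 ms still counts as "in time".
 */
fun finalDecision(
    onlineDecision: Decision?,
    elapsed: Duration,
    offlineDecision: Decision
): Decision =
    if (onlineDecision != null && elapsed <= ONLINE_TIMEOUT) onlineDecision
    else offlineDecision
```

Whatever finalDecision returns is the value the validator would then report back to TapConnect.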

Performance requirements

TapConnect needs to be very responsive. For example, the Issuing Service that creates tickets and barcodes needs to:

- Respond within 1 second.

- Perform at least 1000 operations per minute.

The Validation Service that performs the online validation of barcode tickets has more demanding requirements:

- Validate a barcode within 500 milliseconds.

- Handle 3,400 validators that scan 25 barcodes per minute each, representing a very busy day in a city the size of Amsterdam.

- Handle 43,000 validators that together send 4,300,000 offline decisions within 2 hours, representing the "catchup" of all validators in a country like The Netherlands after a full day of downtime (like a huge AWS outage).
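To get a feel for these numbers, it helps to convert them to per-second rates. The snippet below is just arithmetic on the figures listed above; the names are chosen for illustration.

```kotlin
// Converts a count over a period into an average rate per second.
fun perSecond(count: Long, seconds: Long): Double = count.toDouble() / seconds

// Issuing: 1000 operations per minute.
val issuingOpsPerSecond = perSecond(1_000L, 60L)                   // about 16.7

// Validation: 3,400 validators x 25 scans per minute.
val tapsPerSecond = perSecond(3_400L * 25L, 60L)                   // about 1,416.7

// Catch-up: 4,300,000 offline decisions within 2 hours.
val catchUpNotifiesPerSecond = perSecond(4_300_000L, 2L * 3_600L)  // about 597.2

// Average backlog per validator during the catch-up.
val notifiesPerValidator = 4_300_000 / 43_000                      // 100
```

These are averages, so real traffic with peaks needs headroom beyond them.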

We validated the real-world performance of TapConnect with Gatling.

Gatling

Gatling is a load test tool based on code. A Gatling load test (called a "simulation") is written in a DSL that defines the scenarios of virtual users (the actions they perform) and the setup of those virtual users (how many users there are over time).

The TapConnect backend is entirely written in Kotlin. Since version 3.7 Gatling offers a Java version of its DSL that can also be used in Kotlin, so we could write our load tests in Kotlin too. Very nice!

The Gatling DSL supports both REST and WebSockets, making it a good fit for load testing the TapConnect APIs. The Issuing API uses REST while the Validation API is based on WebSockets.

Issuing load test

As an example, we'll create a load test for the TapConnect Issuing Service that handles REST calls related to travel products, tickets and barcodes. Our test will perform a typical user interaction with the Issuing REST API:

- list all products

- look at a detail page of a product

- create a ticket

- get a barcode for the ticket

The test should check that the Issuing Service can handle at least 1000 requests per minute. The response time of each request should be less than 1 second.

We start with a simulation class:

class IssuingLoadTest : Simulation()

Our test code will consist of three parts:

- set up a test fixture using internal REST endpoints

- perform the user actions described above

- tear down the test fixture and reset the system back to the original state using internal REST endpoints

Setup and tear down

Gatling offers before() and after() functions in the Simulation base class that can be overridden to handle setup and tear down. However, we cannot use the Gatling DSL in those functions so doing REST calls in there is tricky.

A simpler alternative is to use three scenarios that are executed sequentially using andThen(). The skeleton of our load test will then look as follows:

class IssuingLoadTest : Simulation() {

val setUpFixture = scenario("Set Up")

val userActions = scenario("User Actions")

val tearDown = scenario("Tear Down")

init {

val testUser = atOnceUsers(1)

val traffic = constantUsersPerSec(4.0).during(Duration.ofMinutes(1))

setUp(

setUpFixture.injectOpen(testUser).andThen(

userActions.injectOpen(traffic).andThen(

tearDown.injectOpen(testUser)

)

)

).protocols(Configuration.httpProtocol)

}

}

One testUser executes the setUpFixture and tearDown scenarios. In between, the user actions are executed by virtual users that arrive at a rate of 4 users per second during one minute. All scenarios use the same HTTP protocol definition that specifies, for example, the base URLs to use and the authentication mechanism.

Assertions

After a load test has run Gatling can verify that traffic statistics match certain assertions. In our case the assertions are:

- The total number of requests is at least 1000.

- The response time of all requests is less than 1 second.

- All requests succeed.

These assertions can be expressed as follows:

setUp(

// snip

).assertions(

global().allRequests().count().gte(1000),

global().responseTime().max().lte(1000),

global().successfulRequests().percent().shouldBe(100.0)

)

Steps

So far the scenarios are empty. Let's fill them with steps to execute.

val setUpFixture = scenario("Setup").exec(

Setup.apiTokens,

Setup.salesCatalog,

Setup.issuingSettings

)

val userActions = scenario("User Actions").exec(

Issuing.listProducts,

pause(Duration.ofSeconds(1)),

Issuing.getProductDetails(ProductCode.OneHour),

pause(Duration.ofSeconds(1)),

Issuing.createTicket(ProductCode.OneHour),

pause(Duration.ofSeconds(1)),

Issuing.activateTicket,

Issuing.getBarcode

)

val tearDown = scenario("Tear Down").exec(

Setup.reset

)

The step implementations are part of Setup and Issuing objects that group related step implementations. Readable step names help a lot to understand the high-level behavior of a Gatling test quickly.
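With the steps filled in, we can estimate how many requests the user actions generate. The sketch below assumes that each of the five Issuing steps sends exactly one HTTP request and that pauses send none. The function name is made up for illustration.

```kotlin
// Expected request count for an open workload with a constant arrival rate.
fun expectedRequests(usersPerSecond: Double, durationSeconds: Long, requestsPerUser: Int): Long =
    Math.round(usersPerSecond * durationSeconds * requestsPerUser)

// 4 users per second during 1 minute, 5 requests per user.
val userActionRequests = expectedRequests(4.0, 60L, 5)  // 1200
```

At 4 users per second for one minute that gives 1200 requests, so the default traffic pattern generates more than the 1000 requests the count assertion asks for. The few requests from the setup and tear-down scenarios come on top of that.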

Let's look at some step implementations in more detail.

The Issuing.listProducts step performs an HTTP GET call to list the travel products offered in sales channel 123. It checks that the response code is 200 OK, so failed requests will show up in the Gatling load test report.

object Issuing {

val listProducts: ChainBuilder = exec(

http("List Products")

.get("/v1/channels/123/products")

.check(status().shouldBe(200))

)

}

The createTicket step accepts an enum productCode that specifies which of the two test products in our test fixture to use. The request body needed for each product is loaded from JSON files.

fun createTicket(productCode: ProductCode): ChainBuilder {

val body = when (productCode) {

OneHour -> RawFileBody("create-one-hour-ticket.json")

Day -> ElFileBody("create-day-ticket.json")

}

return exec(

http("Create Ticket")

.post("/v1/tickets/create")

.body(body).asJson()

.check(

status().shouldBe(200),

jsonPath("$.ticketId").saveAs("ticketId"),

)

)

}

The JSON response contains a ticketId property we need in future steps. The property is extracted from the response using JSON path syntax and saved as a Gatling session attribute.

Session attributes

Each virtual user in Gatling has its own session that can contain key-value pairs called session attributes. Each session attribute has a name, and can be substituted in URLs and ElFileBody data with #{name}.

For example, the activateTicket step that starts the validity period of a 1-hour card uses the ticketId session attribute in the URL:

val activateTicket: ChainBuilder = exec(

http("Activate Ticket")

.post("/v1/tickets/#{ticketId}/activate")

.check(status().shouldBe(200))

)

There are three ways to create session attributes.

- Extract data from a response and use saveAs(), like the ticketId described above.

- Set a value directly with session.set(). For example, this step sets the current date as today:

val today: ChainBuilder = exec { session -> session.set("today", ZonedDateTime.now().toLocalDate()) }

- Use a feeder, which is basically an iterator that returns a session attribute. For example:

val timeFeeder = generateSequence {

val time = DateTimeFormatter.ISO_OFFSET_DATE_TIME.format(ZonedDateTime.now())

mapOf("now" to time)

}.iterator()

The step feed(timeFeeder) would set the session attribute now to the current time.

Session attributes can be used to store state between API calls. By using consistent session attribute names, steps can be combined in a natural way to interact with an API.
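To make the #{name} substitution concrete, here is a plain-Kotlin sketch of the idea. It is a simplified stand-in and not Gatling's implementation, whose expression language supports much more than simple names. The render function and its error message are hypothetical.

```kotlin
// Matches placeholders of the form #{name}.
val placeholder = Regex("""#\{(\w+)\}""")

// Replaces every #{name} in the template with the matching session attribute.
// Fails when the template refers to an attribute that is not in the session.
fun render(template: String, session: Map<String, Any>): String =
    placeholder.replace(template) { match ->
        val name = match.groupValues[1]
        session[name]?.toString() ?: error("No session attribute named '$name'")
    }
```

For example, render("/v1/tickets/#{ticketId}/activate", mapOf("ticketId" to "abc")) yields "/v1/tickets/abc/activate". This mirrors how a ticketId saved by one step ends up in the URL of the next.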

Running tests

Gatling provides a Maven plugin that

makes it easy to run load tests in a Maven-based project. For example, the following command would run our

IssuingLoadTest:

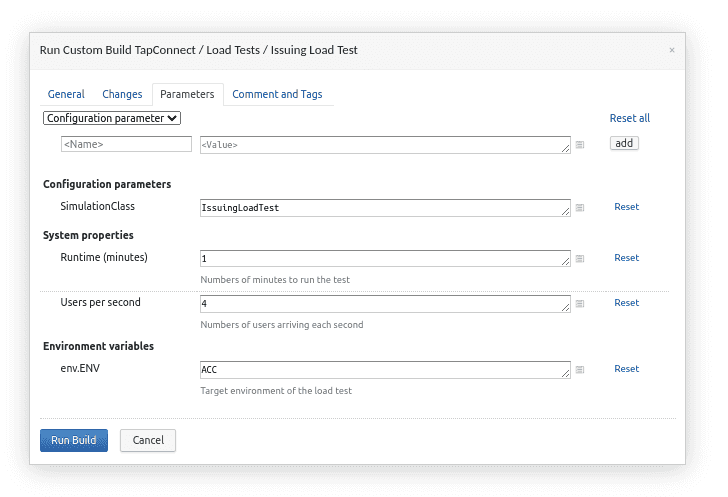

mvn clean gatling:test -Dgatling.simulationClass=IssuingLoadTestWe noticed it's nice to parameterize load tests. For example, a test could read the number of users per second and total run time from system properties and use that to define the traffic pattern.

val usersPerSecond = System.getProperty("usersPerSecond", "4").toDouble()

val runTimeMinutes = System.getProperty("runTimeMinutes", "1").toLong()

val traffic = constantUsersPerSec(usersPerSecond)

.during(Duration.ofMinutes(runTimeMinutes))

The test can then be run multiple times with different parameters without changing code all the time.
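One caveat with reading parameters this way is that a typo in a -D value surfaces as a bare NumberFormatException. A small helper could fail with a clearer message instead. The sketch below is a hypothetical addition and not part of our test code; the name positiveDoubleProperty and the wording of the message are made up.

```kotlin
// Reads a positive, finite number from a system property.
// Returns the default when the property is not set at all.
fun positiveDoubleProperty(name: String, default: Double): Double {
    val raw = System.getProperty(name) ?: return default
    val value = raw.toDoubleOrNull()
    if (value == null || !value.isFinite() || value <= 0.0) {
        throw IllegalArgumentException("-D$name must be a positive number, but was '$raw'")
    }
    return value
}
```

A call such as positiveDoubleProperty("usersPerSecond", 4.0) could then replace the direct System.getProperty(...).toDouble() call.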

We run load tests on a dedicated TeamCity build server agent that has all the right OS performance tweaks. Each load test has its own build configuration where each load test parameter has a corresponding TeamCity build parameter. A load test can then be run with a single button click in TeamCity, showing a nice UI to tweak parameters before running the test.

After each run the load test report is saved as a build artifact and shown in a separate report tab on the build results page. When global assertions of a simulation fail the build fails too.

Issuing service performance

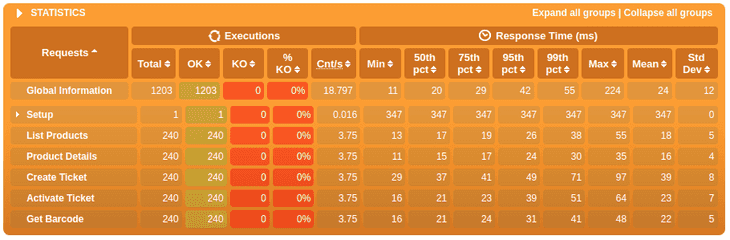

The Issuing load test described in the previous section ran without major issues. Our default setup on AWS could handle the load easily, with mean response times around 40 milliseconds for creating tickets and 20 milliseconds for the other API calls.

For each load test Gatling creates a nice interactive HTML report with detailed graphs. The statistics summary for the Issuing load test is shown below.

Validation load tests

The TapConnect Validation Service should handle 3,400 validators that each scan 25 barcodes per minute.

Let's look at some details of the ValidationLoadTest that verifies these requirements.

The test first reads the number of validators and taps per minute as system properties, so we can change the generated load easily.

val validators = System.getProperty("validators", "3400").toInt()

val tapsPerMinute = System.getProperty("tapsPerMinute", "25").toInt()

The barcodes to scan are pre-generated by another Gatling simulation that calls the Issuing API.

All generated barcodes are written to a CSV file barcodes.csv that looks like this:

ticketId,barcode

zvssZzSoXwvuUrg6mqol,I1VUMDEzNjI2VENUMDAx...

z1ULGehfAcKuVGVR5wRN,I1VUMDEzNjI2VENUMTIz...

...

The ValidationLoadTest uses a feeder that reads the barcodes.csv file again.

val barcodeFeeder = csv("barcodes.csv").circular()

Each feed(barcodeFeeder) call sets the session attributes ticketId and barcode (the CSV headers) to the values of the next row in the CSV file.
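The circular behavior means the feeder starts again at the first row once the file is exhausted, so a long-running test never runs out of barcodes. The plain-Kotlin sketch below illustrates that idea only. It is not how Gatling implements csv(...).circular(), the function name is made up, and the naive split on commas ignores quoting.

```kotlin
// Illustration of a circular feeder over CSV text with a header line.
// Assumption: values contain no commas or quotes.
fun circularFeeder(csvText: String): Iterator<Map<String, String>> {
    val lines = csvText.lines().filter { it.isNotBlank() }
    val headers = lines.first().split(",")
    val records = lines.drop(1).map { line -> headers.zip(line.split(",")).toMap() }
    require(records.isNotEmpty()) { "The CSV needs at least one data row" }

    var index = 0
    // Returns the current record and wraps around after the last one.
    return generateSequence {
        records[index].also { index = (index + 1) % records.size }
    }.iterator()
}
```

With two data rows, the third call to next() returns the first row again.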

The load test scenario of each validator looks as follows:

val validatorActions = scenario("$tapsPerMinute taps/min on $validators validators").exec(

Validation.connect(

exec(

Validation.init,

forever().on(

pace(Duration.ofMillis(60_000L / tapsPerMinute)).exec(

feed(barcodeFeeder),

Validation.tapBarcode,

Validation.notifyBarcode

)

)

)

)

)

Each validator creates a WebSocket connection to the Validation Service and initializes it with an init message. Next follows an endless loop that gets a barcode, sends a tap message to check its validity and then confirms the final decision in a notify message. Gatling's pace function makes sure that we perform this loop at the desired rate of 25 taps per minute.
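The interval passed to pace follows directly from the desired rate. The helper below only repeats the arithmetic used in the scenario; the name paceInterval is made up for illustration.

```kotlin
import java.time.Duration

// Time budget for one loop iteration at the given rate.
// Integer division truncates, so a rate that does not divide 60,000 evenly
// results in a marginally shorter interval and thus a slightly higher rate.
fun paceInterval(tapsPerMinute: Int): Duration {
    require(tapsPerMinute > 0) { "tapsPerMinute must be positive" }
    return Duration.ofMillis(60_000L / tapsPerMinute)
}
```

For 25 taps per minute this gives 2,400 milliseconds per iteration, so each validator starts a new tap every 2.4 seconds as long as the tap and notify round trips together stay below that.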

Using WebSockets in Gatling is slightly different from using REST calls. First you need to create a connection explicitly, which we modeled in a connect step that accepts a list of actions to execute once the WebSocket connection has been created.

fun connect(actions: ChainBuilder): ChainBuilder =

exec(

ws("Connect")

.connect("/validate/json/v1")

.onConnected(actions)

)

The other steps all send a WebSockets frame and wait for a reply using Gatling's checkTextMessage function. The checks confirm that we got the right response back (similar to checking an HTTP status code) and can be used to extract data from the response.

For example, the tapBarcode step sends a WebSockets frame with the barcode to validate. The regex checks wait for a response and extract the access property from it. This value can then be used in the notifyBarcode step to notify TapConnect of the final decision made by the validator.

val tapBarcode: ChainBuilder = group("Tap").on(

exec(

ws("Tap Request")

.sendText(ElFileBody("tap.txt"))

.await(Duration.ofDays(1)).on(

ws.checkTextMessage("Tap Response")

.check(

regex("""(?s)\ntap-response\n.*"access" *: *(true|false)""")

.saveAs("access")

)

)

)

)

The performance requirements of the ValidationLoadTest are expressed in the following assertions. Each tap response should arrive in at most 500 milliseconds and all requests should succeed.

setUp(

// snip

).assertions(

details("Tap", "Tap Response").responseTime().max().lte(500),

global().successfulRequests().percent().shouldBe(100.0)

)

The ValidationLoadTest did not pass right away on our initial AWS environment setup. To investigate the bottleneck we slowly increased the number of connected validators. We first noticed that the Java heap size grew pretty fast with the number of connected validators. Using the AWS CRT client reduced the heap size significantly. The next bottleneck was the CPU, which maxed out around 800 connected validators. The solution was more CPU power. More powerful nodes increased throughput, showing we can scale up. More nodes increased throughput linearly, proving that TapConnect scales out nicely.

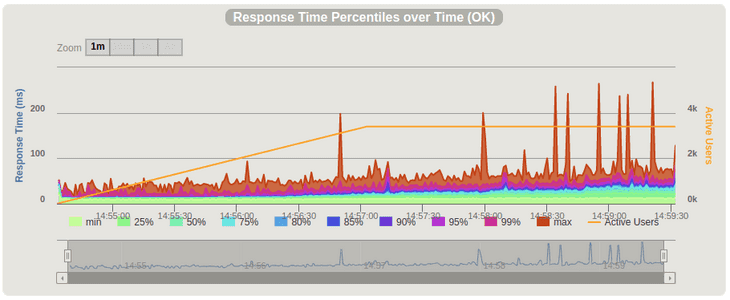

After these tweaks TapConnect could easily handle 3,400 validators at full scanning speed. Taps were processed in a mean time of 21 milliseconds, with 50 milliseconds as 99th percentile and very occasional spikes up to 300 milliseconds. The graph below shows the response time percentiles of tap responses over time.

Subsequent load tests simulated 43,000 validators. TapConnect was able to ingest 4,300,000 notify messages with offline decisions from these validators in half an hour, which was well below the target time of 2 hours max.
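As a rough check on the margin, these figures translate into the following average rates. "Half an hour" is treated as exactly 30 minutes here, which is an approximation, and the names are chosen for illustration.

```kotlin
// Average number of messages per second over a period given in minutes.
fun messagesPerSecond(messages: Long, minutes: Long): Double =
    messages.toDouble() / (minutes * 60)

val requiredNotifyRate = messagesPerSecond(4_300_000L, 120L)  // about 597 per second
val achievedNotifyRate = messagesPerSecond(4_300_000L, 30L)   // about 2,389 per second

// How many times faster than required the catch-up completed.
val catchUpHeadroom = achievedNotifyRate / requiredNotifyRate  // about 4
```

In other words, the catch-up ran at roughly four times the rate needed to finish within the two-hour target.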

Conclusions

TapConnect held up nicely under load, and Gatling was a valuable tool to prove that. Steps as functions formed a nice high-level language to hit the TapConnect APIs. Session variables provided a clean way to let steps build on each other to create realistic scenarios. The interactive report made it enjoyable to analyze results.

More information about TapConnect can be found at https://tapconnect.io.